AI Tech Stack Copilot

AI-Powered Architecture Guidance, On Demand

FAQs

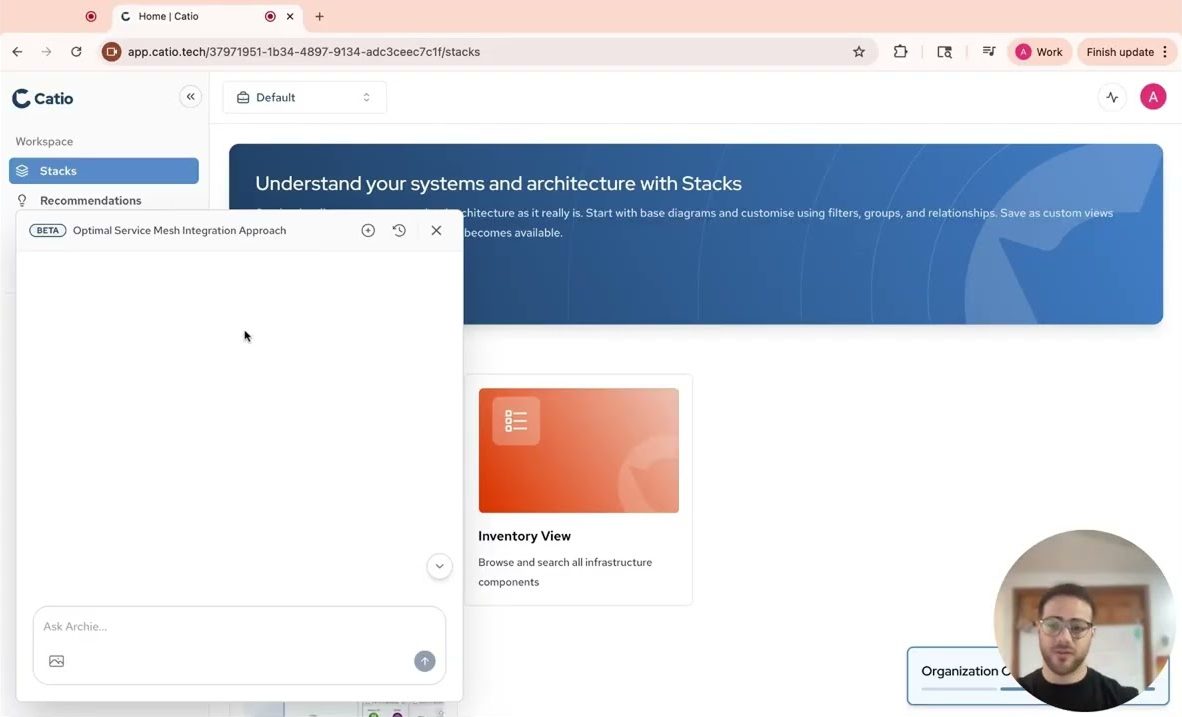

Archie is Catio's conversational architecture copilot. Its key capabilities are:

- Natural language architecture queries: Users can ask questions about their architecture in plain English and get contextual responses

- Real-time architecture understanding: Archie has "full domain understanding of your architecture" and its requirements, so it gives insights specific to your systems rather than generic responses

- On-demand expertise: Gives any team member "next level insight across the architecture that's on demand," tailored to their specific context

- Architecture-specific learning: Lets architects "learn and introspect [their] existing systems at scale" and get recommendations for immediate, practical issues

Archie integrates deeply with Catio's other modules:

- Stacks integration: Archie has full understanding of the live digital twin of your architecture, so its responses are grounded in actual infrastructure data

- Requirements module: Understands your governance standards and regulatory requirements, incorporating them into responses

- Recommendations module: Can surface and explain existing recommendations, helping users understand why certain changes are suggested

- Cross-module navigation: Responses can help you "navigate to that part of your tech stack to learn more"
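Catio does not describe here how Archie grounds its answers. One common technique for grounding an LLM assistant in live data is to retrieve the records relevant to a question and place them in the prompt together with the rules that apply to them. The sketch below illustrates that general idea only. `Component`, `Requirement`, and `build_context` are hypothetical names, the data model is deliberately simplified, and the keyword matcher is naive. None of this is Catio's API or implementation.

```python
from dataclasses import dataclass, field


# Hypothetical, simplified component from an architecture "digital twin".
@dataclass
class Component:
    name: str
    kind: str   # e.g. "database", "service", "queue"
    cloud: str  # e.g. "AWS", "Azure", "GCP"
    tags: set[str] = field(default_factory=set)


# Hypothetical governance/regulatory requirement scoped to a component kind.
@dataclass
class Requirement:
    rule_id: str
    applies_to: str  # component kind this rule covers
    text: str


def build_context(question: str, components: list[Component],
                  requirements: list[Requirement]) -> str:
    """Pick components mentioned in the question (naive keyword match)
    and attach the requirements that apply to their kinds."""
    words = set(question.lower().replace("?", " ").split())
    relevant = [
        c for c in components
        if c.name.lower() in words
        or c.kind in words
        or words & {t.lower() for t in c.tags}
    ]
    kinds = {c.kind for c in relevant}
    rules = [r for r in requirements if r.applies_to in kinds]

    lines = ["Question: " + question, "Relevant components:"]
    lines += [f"- {c.name} ({c.kind} on {c.cloud})" for c in relevant]
    lines.append("Applicable requirements:")
    lines += [f"- [{r.rule_id}] {r.text}" for r in rules]
    return "\n".join(lines)


# Illustrative data only.
components = [
    Component("orders-db", "database", "AWS", {"postgres"}),
    Component("checkout", "service", "AWS"),
]
requirements = [
    Requirement("SEC-1", "database", "Databases must encrypt data at rest."),
]
print(build_context("Is orders-db compliant?", components, requirements))
```

In a real system the keyword match would typically be replaced by search or embedding-based retrieval over the twin. The string that results would then be sent to the model as context, so that its answer refers to actual components and rules rather than generic advice.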

Catio integrates with major cloud platforms (AWS, Azure, GCP), developer and collaboration tools (GitHub, Jira, Confluence, Notion), and identity and access systems (Okta, Azure AD, SSO/SAML, LDAP). Exports are available in JSON/YAML, PDF, and image formats, so they fit into your existing workflows.

Catio lets you snapshot your current architecture state, model proposed changes, and simulate their impact in a safe environment. Teams can compare states, validate outcomes, and reduce risk before committing changes to production.
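Here is a minimal sketch of what "compare states" can mean in practice. It assumes each snapshot is exported as JSON with a top-level `components` list and that each entry is identified by a `name` field. That schema and the helpers `load_snapshot` and `diff_snapshots` are hypothetical illustrations, not Catio's export format or API.

```python
import json


def load_snapshot(text: str) -> dict[str, dict]:
    """Index a hypothetical JSON snapshot by component name."""
    data = json.loads(text)
    return {c["name"]: c for c in data["components"]}


def diff_snapshots(before: dict[str, dict],
                   after: dict[str, dict]) -> dict[str, list[str]]:
    """Report components added, removed, or changed between two snapshots."""
    added = sorted(after.keys() - before.keys())
    removed = sorted(before.keys() - after.keys())
    # A component counts as "changed" if any of its fields differ.
    changed = sorted(n for n in before.keys() & after.keys()
                     if before[n] != after[n])
    return {"added": added, "removed": removed, "changed": changed}


# Illustrative snapshots: the current state and a proposed change.
current = load_snapshot(
    '{"components": ['
    '{"name": "orders-db", "kind": "database", "instance": "m5.large"},'
    '{"name": "legacy-cron", "kind": "job"}]}'
)
proposed = load_snapshot(
    '{"components": ['
    '{"name": "orders-db", "kind": "database", "instance": "m5.xlarge"},'
    '{"name": "events", "kind": "queue"}]}'
)
print(diff_snapshots(current, proposed))
```

Keying snapshots by a stable identifier keeps the comparison a simple set operation. A simulation step would then assess the impact of each entry in `added`, `removed`, and `changed` before anything reaches production.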